The new State-Of-The-Art models by Ultralytics, a complete review

Written by Henry Navarro

Introduction 🎯

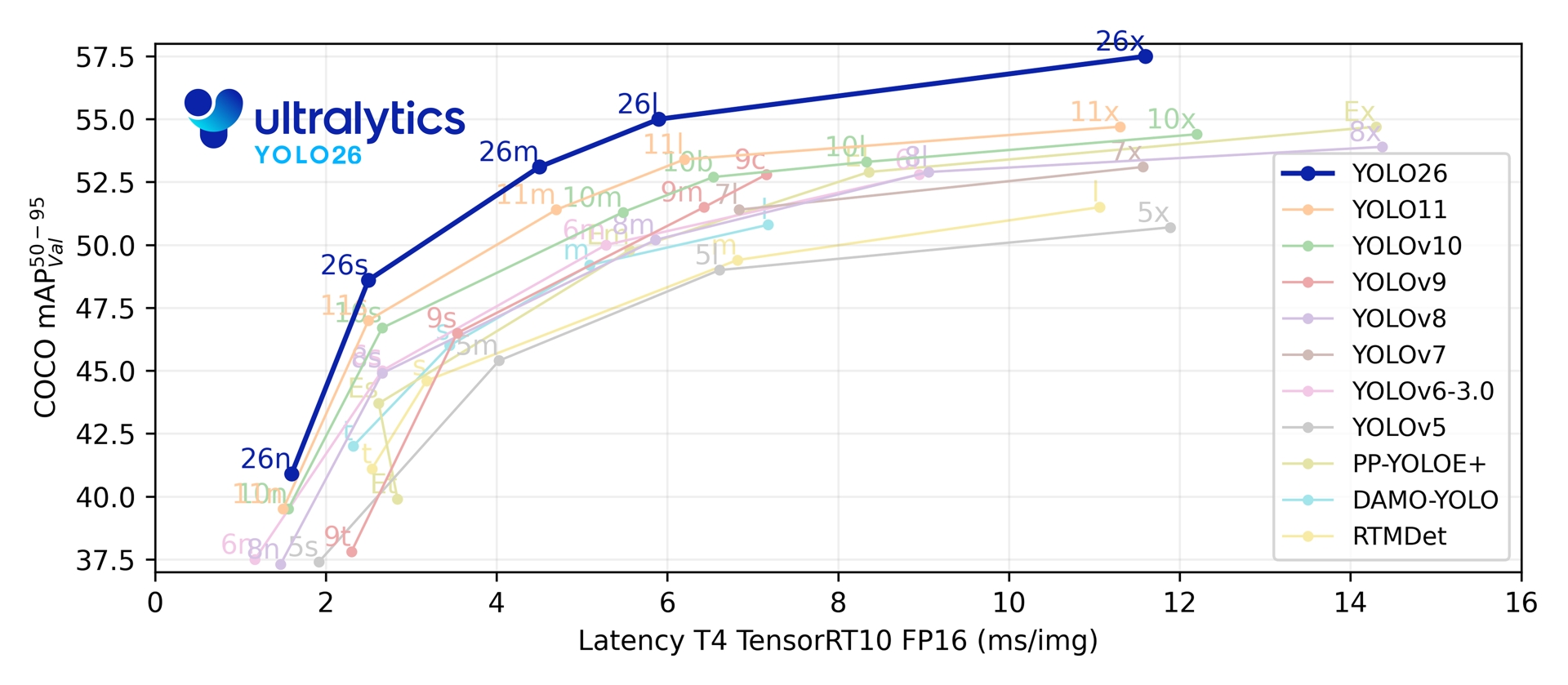

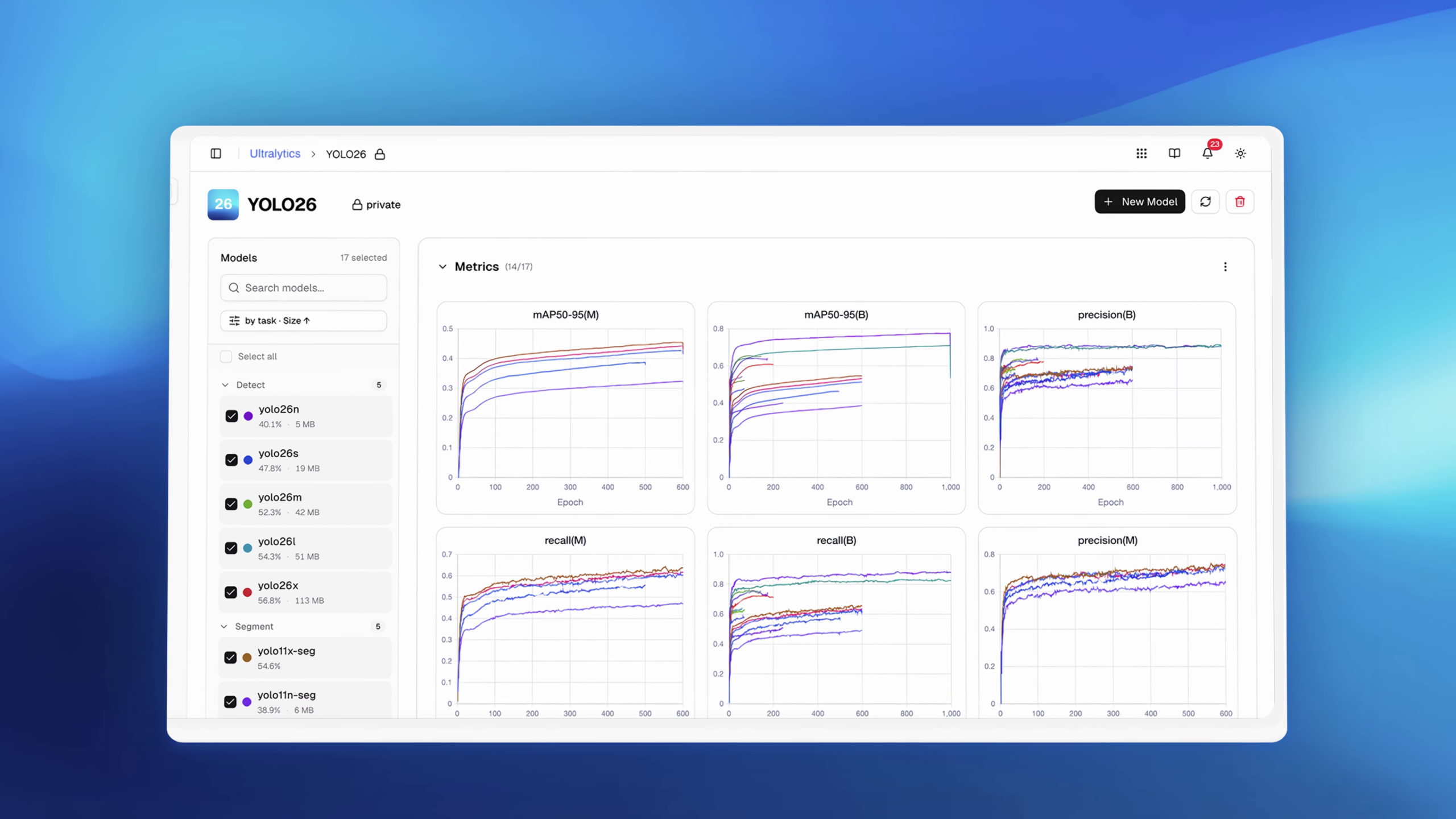

Ultralytics YOLO26 represents a major leap forward in modern computer vision. Since the release of YOLOv5, Ultralytics has become a reference company in the field. In 2023, YOLOv8 arrived together with a new framework, the ultralytics package. Now the company has introduced YOLO26 (the number now reflects the release year), and with the ultralytics package you get a single, unified framework that can perform object detection, instance segmentation, pose estimation, image classification, and oriented bounding box (OBB) detection, and can even segment objects using natural language prompts. For enterprises and teams building real‑world AI systems, Ultralytics YOLO26 is not just a model; it is a production‑ready framework.

At NeuralNet Solutions, we work with organizations to train, optimize, deploy, and scale Ultralytics YOLO26 across cloud, edge, and embedded devices. In this article, we explain what makes YOLO26 special, how it works in practice, and how businesses can turn it into real value.

Why Ultralytics YOLO26 Is a Game Changer

YOLO26 consolidates multiple computer vision tasks into a single ecosystem:

- ✅ Object detection (bounding boxes)

- ✅ Instance segmentation (pixel‑level precision)

- ✅ Pose estimation (human keypoints)

- ✅ Image classification

- ✅ Oriented Bounding Boxes (OBB) for aerial and satellite imagery

- ✅ Text‑prompted segmentation with YOLO‑E

👉 Explore the official documentation here

All of this is available as open‑source software (AGPL) and can be used locally, on‑premise, or through the official Ultralytics platform.

👉 Explore the official platform here

Model Sizes and Deployment Scenarios

As with previous Ultralytics releases, YOLO26 offers five model sizes for every task:

- Nano (n) – Ultra‑lightweight models for mobile and low‑power devices

- Small (s) – Optimized for real‑time edge inference

- Medium (m) – Balanced accuracy and performance

- Large (l) – High accuracy for demanding applications

- Extra‑Large (x) – Maximum precision for research and enterprise workloads

This flexibility allows us to deploy YOLO26 on:

- Mobile devices

- NVIDIA Jetson platforms

- Edge accelerators

- Cloud GPUs

- High‑performance on‑premise servers
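The weight files used in this article (yolo26x.pt, yolo26x-seg.pt, yolo26x-pose.pt, yolo26x-obb.pt) suggest a simple naming pattern of size letter plus task suffix. The helper below is a small sketch of that pattern. The function name `weights_name` is ours, the `-cls` suffix is assumed from earlier Ultralytics releases, and we have not confirmed that every size and task combination is published, so treat the output as a guess to check against the official documentation.

```python
# Sketch only: assumes the "yolo26<size><suffix>.pt" pattern seen in this
# article's examples (yolo26x.pt, yolo26x-seg.pt, ...) holds for every size.
SIZES = ("n", "s", "m", "l", "x")
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "obb": "-obb",
    "classify": "-cls",  # assumed from earlier Ultralytics releases
}


def weights_name(size: str, task: str = "detect") -> str:
    """Build a pretrained-weights file name such as 'yolo26n-seg.pt'."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    if task not in TASK_SUFFIXES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASK_SUFFIXES)}")
    return f"yolo26{size}{TASK_SUFFIXES[task]}.pt"
```

For example, `weights_name("n", "segment")` gives a nano segmentation file name that could be passed to `YOLO(...)` when targeting a low‑power device.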

Getting Started with Ultralytics YOLO26 🛠️

To work with YOLO26 locally, we recommend using a Python virtual environment and installing the latest Ultralytics package, following the official Ultralytics documentation.

pip install --upgrade ultralytics

The official Ultralytics documentation provides complete guidance for installation, training, inference, and deployment; you can access it here

From the documentation, you can run inference using either Python code or a simple CLI command, making YOLO26 accessible to both researchers and production engineers.
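Before running anything, it can help to confirm that the package is actually visible inside the active virtual environment. The snippet below is a minimal sketch using only the Python standard library. The helper name `installed_version` is ours and is not part of Ultralytics.

```python
from importlib import metadata


def installed_version(package: str = "ultralytics"):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version()
print(f"ultralytics: {version or 'not installed'}")
```

If this prints "not installed", the most likely cause is that pip ran in a different environment than the interpreter you are using.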

Predict on Videos, Images, or Cameras Using YOLO26 📹

Object detection remains one of the most widely used tasks in computer vision. With YOLO26, detection is fast, accurate, and easy to integrate.

Python 🐍

from ultralytics import YOLO

# Load a pretrained YOLO26 detection model

model = YOLO("yolo26x.pt")

# Run from different sources

results = model(source=0, show=True, half=True) # predict on your webcam

results = model(source="video.mp4", show=True, half=True) # predict on video

results = model(source="rtsp://user:password@ipcamera:portcamera", show=True, half=True) # predict on rtsp camera

results = model(source="image.jpg", show=True, half=True) # predict on image

CLI 🤓

yolo predict detect source=0 show=True half=True model=yolo26x.pt

Common enterprise use cases:

- Smart cities (traffic and pedestrian analysis)

- Agriculture (crop and livestock monitoring)

- Retail analytics

- Security and surveillance
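For use cases like traffic or retail analytics, the raw detections usually need to be turned into counts. The sketch below assumes each result exposes `names` (class id to class name) and `boxes.cls` (one class id per box), which is how Ultralytics result objects are laid out as far as we know. The function `count_by_class` is our own illustration, not a library call.

```python
from collections import Counter


def count_by_class(results) -> Counter:
    """Count detections per class name across an iterable of result objects.

    Each item is expected to expose `names` (class id -> name) and
    `boxes.cls` (one class id per detected box), as Ultralytics results do.
    """
    counts = Counter()
    for result in results:
        for class_id in result.boxes.cls:
            counts[result.names[int(class_id)]] += 1
    return counts
```

A typical call would be `count_by_class(model(source="video.mp4", stream=True))`, where `stream=True` asks Ultralytics for a generator so long videos are not held in memory. Note that this counts detections per frame, so the same object is counted once for every frame it appears in; counting unique objects would need tracking on top.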

Instance Segmentation: Pixel‑Level Precision ✂️

Segmentation takes detection a step further by identifying each object at the pixel level. YOLO26 segmentation models are ideal for applications requiring extreme precision.

Python 🐍

from ultralytics import YOLO

# Load a pretrained YOLO26 segmentation model

model = YOLO("yolo26x-seg.pt")

# Run real-time segmentation from webcam

results = model(source=2, show=True, half=True, retina_masks=True)

CLI 🤓

yolo predict segment source=2 show=True half=True retina_masks=True model=yolo26x-seg.pt

Applications include:

- Livestock counting

- Medical imaging support

- Real estate and interior analysis

- Industrial inspection
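In inspection or counting applications, the useful number is often the area of each segmented object. The sketch below computes it from polygon outlines with the shoelace formula. It assumes the outlines come from `result.masks.xy`, which in Ultralytics holds one array of (x, y) pixel vertices per object; the helper names are ours.

```python
def polygon_area(points) -> float:
    """Area of a simple polygon given as (x, y) vertices (shoelace formula)."""
    n = len(points)
    if n < 3:
        return 0.0
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def mask_areas(polygons):
    """Pixel area of each mask outline in a list of polygons."""
    return [polygon_area(polygon) for polygon in polygons]
```

With a real result this would be called as `mask_areas(result.masks.xy)`, after checking that `result.masks` is not `None` (it can be when nothing is detected). Areas are in pixels, so converting them to physical units requires a known camera scale.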

Pose Estimation with Ultralytics YOLO26 💃

Pose estimation models detect human keypoints on the arms, legs, face, and torso. YOLO26 pose estimation enables detailed human motion analysis.

CLI 🤓

yolo predict pose source=2 show=True half=True model=yolo26x-pose.pt

Python 🐍

from ultralytics import YOLO

# Load a pretrained YOLO26 pose estimation model

model = YOLO("yolo26x-pose.pt")

# Run real-time pose estimation from webcam

results = model(source=2, show=True, half=True)

Use cases:

- Sports and fitness analytics

- Workplace safety

- Human behavior analysis

- Social media and content creation
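For sports or workplace-safety analysis, keypoints are usually converted into joint angles. The sketch below computes the angle at the left elbow. It assumes the pretrained pose models follow the 17-point COCO keypoint order (5 = left shoulder, 7 = left elbow, 9 = left wrist), which was the case for earlier Ultralytics pose models but which we have not verified for YOLO26. The function names are ours.

```python
import math

# COCO keypoint indices (assumed layout of the pretrained pose models)
LEFT_SHOULDER, LEFT_ELBOW, LEFT_WRIST = 5, 7, 9


def joint_angle(a, b, c) -> float:
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    angle_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    angle_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    degrees = abs(math.degrees(angle_ba - angle_bc)) % 360.0
    return 360.0 - degrees if degrees > 180.0 else degrees


def left_elbow_angle(person_keypoints) -> float:
    """Elbow angle for one person, given a sequence of (x, y) keypoints."""
    return joint_angle(
        person_keypoints[LEFT_SHOULDER],
        person_keypoints[LEFT_ELBOW],
        person_keypoints[LEFT_WRIST],
    )
```

With a real result, the keypoints of the first detected person could be passed as `left_elbow_angle(result.keypoints.xy[0].tolist())`. An angle near 180 degrees means a straight arm; smaller values mean a bent elbow.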

Oriented Bounding Boxes (OBB) for Aerial Vision 📦

YOLO26 includes OBB models designed for aerial and satellite imagery, where objects are often rotated.

Python 🐍

from ultralytics import YOLO

# Load a pretrained YOLO26 OBB model

model = YOLO("yolo26x-obb.pt")

# Run oriented bounding box detection on an aerial video

results = model(source="aerial.mp4", show=True)

CLI 🤓

yolo predict obb model=yolo26x-obb.pt source=aerial.mp4 show=True

This capability is critical for:

- Satellite imagery analysis

- Urban planning

- Maritime and port monitoring

- Defense and geospatial intelligence
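To make the idea of a rotated box concrete, the sketch below converts a box given as centre, size, and rotation into its four corner points. Ultralytics exposes this representation as `result.obb.xywhr` (rotation in radians) and also provides ready-made corners in `result.obb.xyxyxyxy`, so this helper is purely illustrative. The function name is ours, and the corner order it returns may differ from the library's.

```python
import math


def obb_corners(cx, cy, w, h, angle_rad):
    """Corner points of a box centred at (cx, cy), rotated by angle_rad."""
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    half_w, half_h = w / 2.0, h / 2.0
    offsets = ((-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h))
    corners = []
    for dx, dy in offsets:
        # Rotate the offset, then translate to the box centre
        corners.append((cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a))
    return corners
```

With an angle of zero the result is an ordinary axis-aligned box, which is a quick sanity check when debugging label conversions for aerial datasets.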

YOLO‑E: Text‑Prompted Segmentation 🤯

One of the most innovative features in YOLO26 is YOLOE, which allows segmentation using natural language prompts.

CLI 🤓

yolo predict model=yoloe-26x-seg.pt source=video_examples/yoloe/5206818-hd_1920_1080_25fps.mp4 show=True half=True retina_masks=True classes="brown horse" conf=0.4

With a simple text prompt like “brown horse” or “white horse”, YOLO‑E selectively segments only the objects you request. For other modalities, check the Ultralytics documentation.

This is incredibly powerful for:

- Dataset pre‑annotation

- Rapid dataset creation

- Semi‑automatic labeling workflows
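For pre-annotation, the predicted masks have to be written out as label files. The sketch below formats polygons as YOLO segmentation label lines, that is, a class index followed by normalised x y pairs. It assumes the polygons come from `result.masks.xyn` (vertices already scaled to the 0-1 range); the helper names are ours, and mapping prompt indices to your dataset's class ids is left to you.

```python
def yolo_seg_label(class_id: int, polygon_xyn) -> str:
    """Format one object as a YOLO segmentation label line.

    `polygon_xyn` holds (x, y) vertices already normalised to the 0-1 range.
    """
    coords = []
    for x, y in polygon_xyn:
        # Clamp to [0, 1] in case a vertex falls slightly outside the image
        coords.append(f"{min(max(float(x), 0.0), 1.0):.6f}")
        coords.append(f"{min(max(float(y), 0.0), 1.0):.6f}")
    return " ".join([str(int(class_id)), *coords])


def labels_for_image(class_ids, polygons_xyn) -> str:
    """Join the label lines of every object in one image, skipping degenerate polygons."""
    lines = [
        yolo_seg_label(class_id, polygon)
        for class_id, polygon in zip(class_ids, polygons_xyn)
        if len(polygon) >= 3
    ]
    return "\n".join(lines)
```

A possible call is `labels_for_image(result.boxes.cls.tolist(), result.masks.xyn)`, with the returned text saved to a `.txt` file next to the image. Labels produced this way are a starting point and should still be reviewed by a human annotator.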

Conclusion 🎯

YOLO26 marks a significant milestone in accessible, production-ready computer vision. By consolidating object detection, instance segmentation, pose estimation, OBB, and text-prompted segmentation into a single, unified framework, YOLO26 eliminates the complexity of managing multiple tools and models. Whether you’re running inference on a mobile device, an edge accelerator, or a cloud GPU cluster, YOLO26 delivers state-of-the-art performance with remarkable flexibility.

For researchers and hobbyists, YOLO26 provides an easy entry point with simple CLI commands and intuitive Python APIs. For enterprises, it represents a strategic advantage: the ability to deploy advanced AI vision systems faster, more reliably, and at scale.

However, moving from experimentation to production requires more than just a powerful model. It demands careful dataset preparation, domain-specific fine-tuning, optimization for your target hardware, and robust deployment infrastructure. That’s where expert guidance becomes invaluable.

From Prototype to Production 🚀

YOLO26 is easy to experiment with, but production deployment requires expertise in:

- Custom dataset preparation and labeling

- Model training and fine‑tuning

- Performance optimization (latency, memory, throughput)

- Deployment on edge, cloud, or hybrid infrastructures

- Monitoring, updates, and lifecycle management
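Performance optimization starts with measuring. The sketch below times repeated calls to any function and reports simple latency statistics. It uses only the standard library and measures wall-clock time, so on a GPU the numbers are only indicative. The helper `measure_latency` and its parameters are our own illustration, not part of Ultralytics.

```python
import statistics
import time


def measure_latency(fn, warmup: int = 3, runs: int = 20) -> dict:
    """Time repeated calls to `fn` and return latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank style index for the 95th percentile
    p95_index = min(len(samples) - 1, int(round(0.95 * (len(samples) - 1))))
    return {
        "mean_ms": statistics.fmean(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }
```

To compare model sizes on your target hardware, you could wrap inference in a lambda, for example `measure_latency(lambda: model("image.jpg", verbose=False))`, and repeat the measurement for each candidate model.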

This is where we come in.

Professional Computer Vision for Enterprise 💼

At NeuralNet Solutions, we specialize in enterprise‑grade YOLO26 solutions:

✅ Custom YOLO26 model training

✅ Advanced segmentation and pose pipelines

✅ Edge deployment (NVIDIA Jetson, embedded devices)

✅ Cloud and on‑premise scaling

✅ Dataset creation and YOLO‑E assisted annotation

✅ End‑to‑end production systems

Whether you are building a proof of concept or scaling to millions of inferences per day, we help you deploy YOLO26 correctly and efficiently.

If you want to train, deploy, or scale YOLO26 for your business, let’s talk.

👉 Schedule a free 30‑minute consultation

https://cal.com/henry-neuralnet/30min

🌐 Website: https://neuralnet.solutions

💼 LinkedIn: https://www.linkedin.com/in/henrymlearning/

The companies adopting advanced computer vision today will lead their industries tomorrow.

#UltralyticsYOLO26 #ComputerVision #YOLO #OpenSourceAI #EdgeAI #NeuralNetSolutions